Codepocalypse Now: LangChain4j vs. Spring AI

Slide 1

Slide 2

About the Speakers
Baruch Sadogursky
• Head of Developer Relations at TuxCare
• @jbaruch
• Co-author of “Liquid Software” and “DevOps Tools for Java Developers”
Viktor Gamov
• Principal Developer Advocate at Confluent
• @gamussa
• Co-author of “Kafka in Action”
Java Expertise
• Combined decades of Java development and advocacy experience
• Speaking at major tech conferences worldwide
• Leaders in AI integration with Java ecosystems

Slide 3

Shownotes
Access all presentation materials, code examples, and references at our resource hubs:
• Baruch’s Shownotes: comprehensive presentation materials and coding examples at speaking.jbaru.ch (@jbaruch)
• Viktor’s Shownotes: the same, just under a different URL: speaking.gamov.io (@gamussa)
• All You Need!: includes slides, video recording, and all code references
@jbaruch @gamussa #J-Spring speaking.gamov.io speaking.jbaru.ch

Slide 4

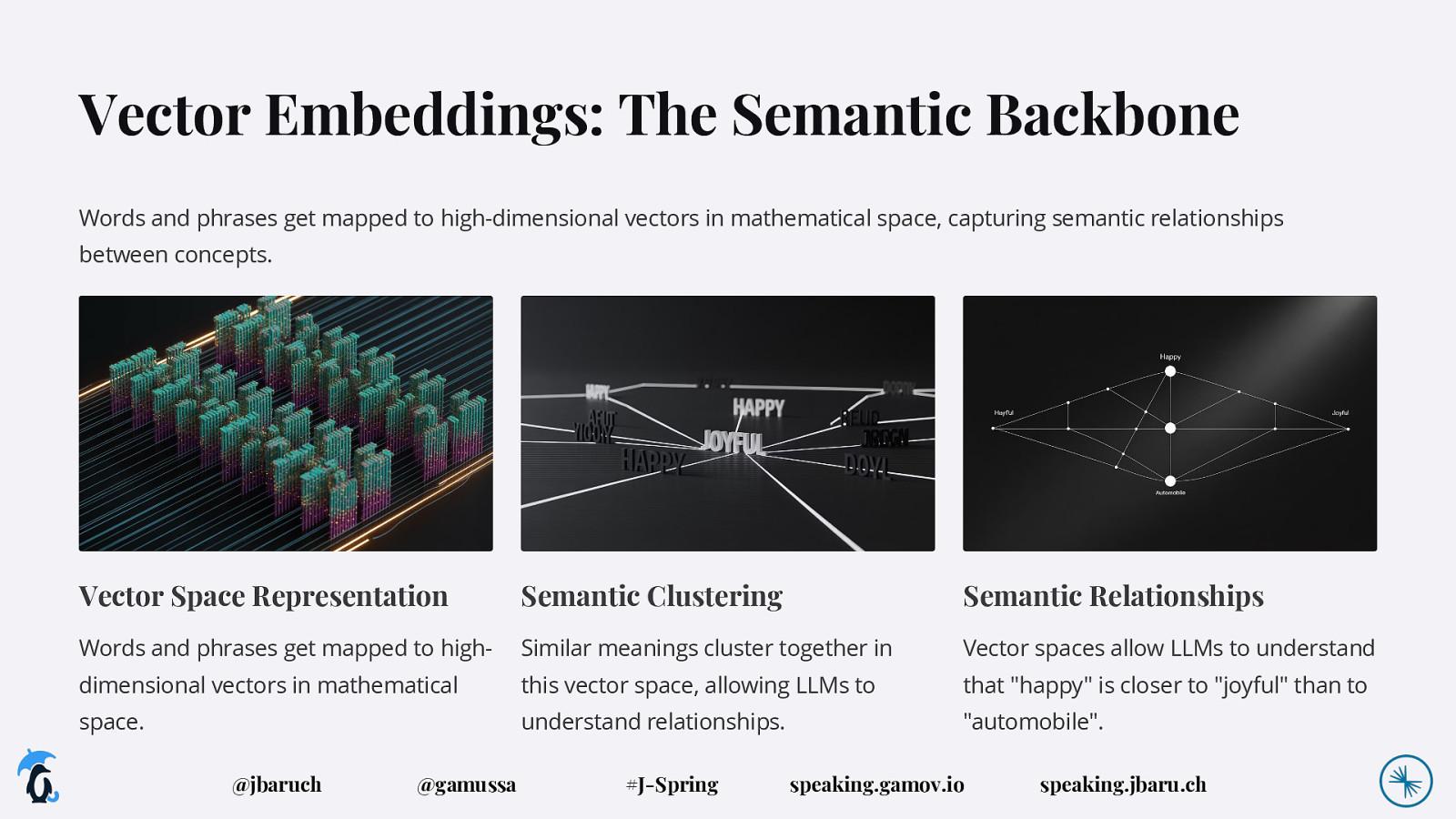

Vector Embeddings: The Semantic Backbone
Words and phrases get mapped to high-dimensional vectors in mathematical space, capturing semantic relationships between concepts.
• Vector Space Representation: each word or phrase becomes a point (a vector) in this high-dimensional space.
• Semantic Clustering: similar meanings cluster together in the vector space, which lets LLMs capture relationships between concepts.
• Semantic Relationships: distance in the space reflects meaning, so “happy” sits closer to “joyful” than to “automobile” (closeness is commonly measured with cosine similarity).
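To make “closer” concrete: similarity between embeddings is commonly measured with cosine similarity, the cosine of the angle between two vectors. The sketch below is plain Java with no framework. The class name `EmbeddingSimilarity` and the three-dimensional vectors are hypothetical, hand-picked to mimic the slide's example rather than produced by a real embedding model, so they illustrate the calculation, not a measured result.

```java
final class EmbeddingSimilarity {

    // Cosine similarity: dot(a, b) / (|a| * |b|).
    // 1.0 means same direction, 0.0 means orthogonal (unrelated).
    static double cosine(double[] a, double[] b) {
        if (a.length != b.length) {
            throw new IllegalArgumentException("vectors must have the same dimension");
        }
        double dot = 0, normA = 0, normB = 0;
        for (int i = 0; i < a.length; i++) {
            dot += a[i] * b[i];
            normA += a[i] * a[i];
            normB += b[i] * b[i];
        }
        if (normA == 0 || normB == 0) {
            throw new IllegalArgumentException("cosine similarity is undefined for a zero vector");
        }
        return dot / (Math.sqrt(normA) * Math.sqrt(normB));
    }

    public static void main(String[] args) {
        // Hypothetical toy vectors, hand-picked for illustration only.
        // Real embeddings come from an embedding model and have hundreds of dimensions or more.
        double[] happy = {0.9, 0.8, 0.1};
        double[] joyful = {0.85, 0.75, 0.15};
        double[] automobile = {0.1, 0.05, 0.95};

        System.out.printf("happy vs joyful:     %.3f%n", cosine(happy, joyful));
        System.out.printf("happy vs automobile: %.3f%n", cosine(happy, automobile));
    }
}
```

Cosine similarity ignores vector length and compares only direction, which is why it is a common default for comparing embeddings.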

Slide 5

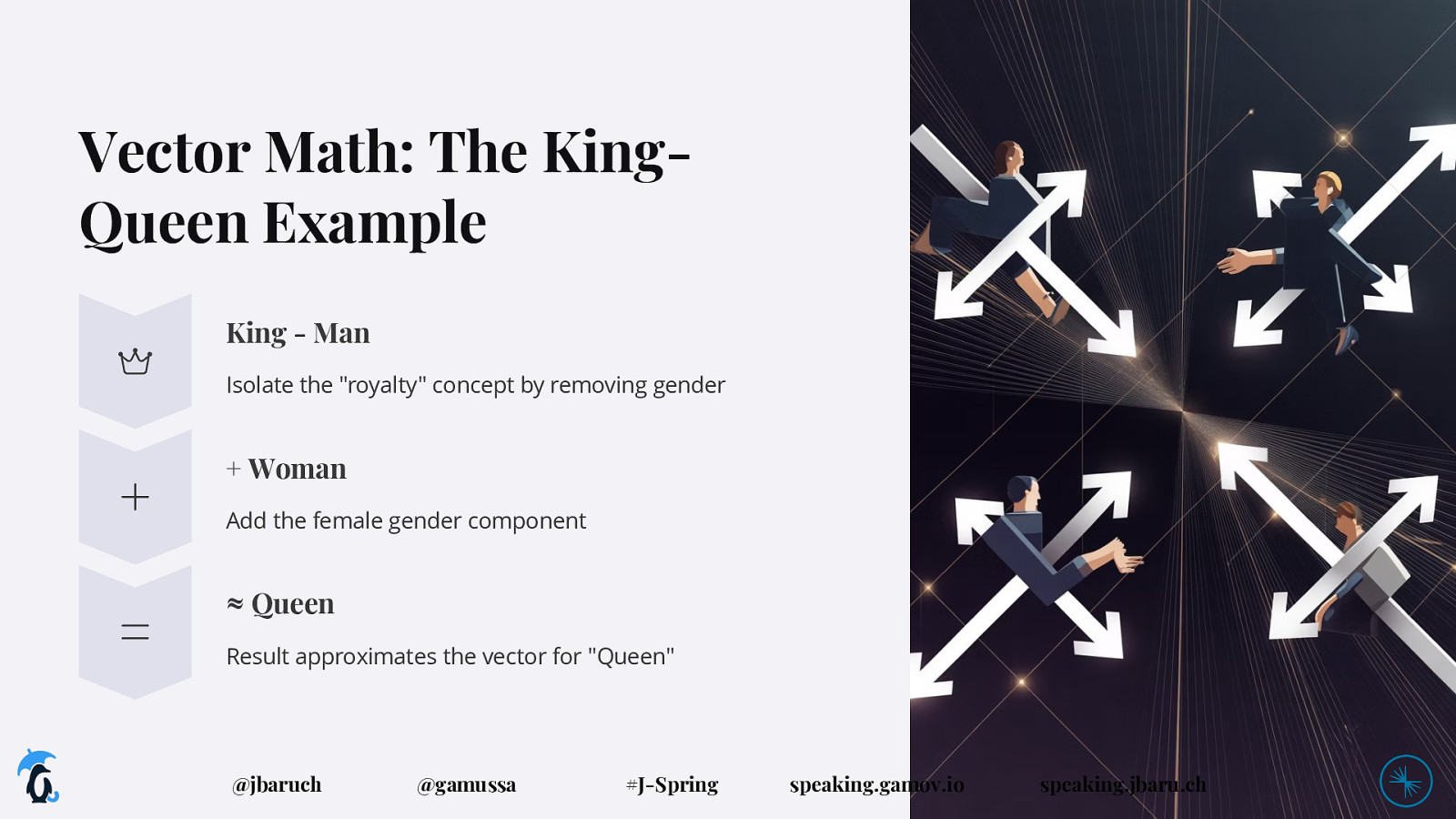

Vector Math: The King-Queen Example
• King - Man: isolate the “royalty” concept by removing gender
• + Woman: add the female gender component
• ≈ Queen: the result approximates the vector for “Queen”
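A toy version of the King-Queen arithmetic, assuming hand-built four-dimensional vectors whose axes we label royalty, male, female, and fruit (learned embedding dimensions have no such readable labels). It subtracts “man” from “king”, adds “woman”, and returns the nearest remaining word by cosine similarity. The class `KingQueen`, its methods, and all numbers are hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Set;

final class KingQueen {

    static double[] plus(double[] a, double[] b) {
        double[] out = new double[a.length];
        for (int i = 0; i < a.length; i++) out[i] = a[i] + b[i];
        return out;
    }

    static double[] minus(double[] a, double[] b) {
        double[] out = new double[a.length];
        for (int i = 0; i < a.length; i++) out[i] = a[i] - b[i];
        return out;
    }

    static double cosine(double[] a, double[] b) {
        double dot = 0, na = 0, nb = 0;
        for (int i = 0; i < a.length; i++) {
            dot += a[i] * b[i];
            na += a[i] * a[i];
            nb += b[i] * b[i];
        }
        return dot / (Math.sqrt(na) * Math.sqrt(nb));
    }

    // Returns the vocabulary word most similar to target, skipping the excluded words.
    static String nearest(Map<String, double[]> vocab, double[] target, Set<String> exclude) {
        String best = null;
        double bestScore = Double.NEGATIVE_INFINITY;
        for (Map.Entry<String, double[]> e : vocab.entrySet()) {
            if (exclude.contains(e.getKey())) continue;
            double score = cosine(target, e.getValue());
            if (score > bestScore) {
                bestScore = score;
                best = e.getKey();
            }
        }
        return best;
    }

    // Hypothetical hand-built vectors; dimensions = [royalty, male, female, fruit].
    static Map<String, double[]> toyVocab() {
        Map<String, double[]> v = new LinkedHashMap<>();
        v.put("king", new double[]{1, 1, 0, 0});
        v.put("man", new double[]{0, 1, 0, 0});
        v.put("woman", new double[]{0, 0, 1, 0});
        v.put("queen", new double[]{0.9, 0.1, 1, 0}); // deliberately not an exact match
        v.put("apple", new double[]{0, 0, 0, 1});
        return v;
    }

    public static void main(String[] args) {
        Map<String, double[]> vocab = toyVocab();
        double[] result = plus(minus(vocab.get("king"), vocab.get("man")), vocab.get("woman"));
        System.out.println(nearest(vocab, result, Set.of("king", "man", "woman"))); // prints queen
    }
}
```

Excluding the input words from the search is a common convention for analogy queries, because with learned embeddings an input word can otherwise come out as the nearest neighbor.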

Slide 6

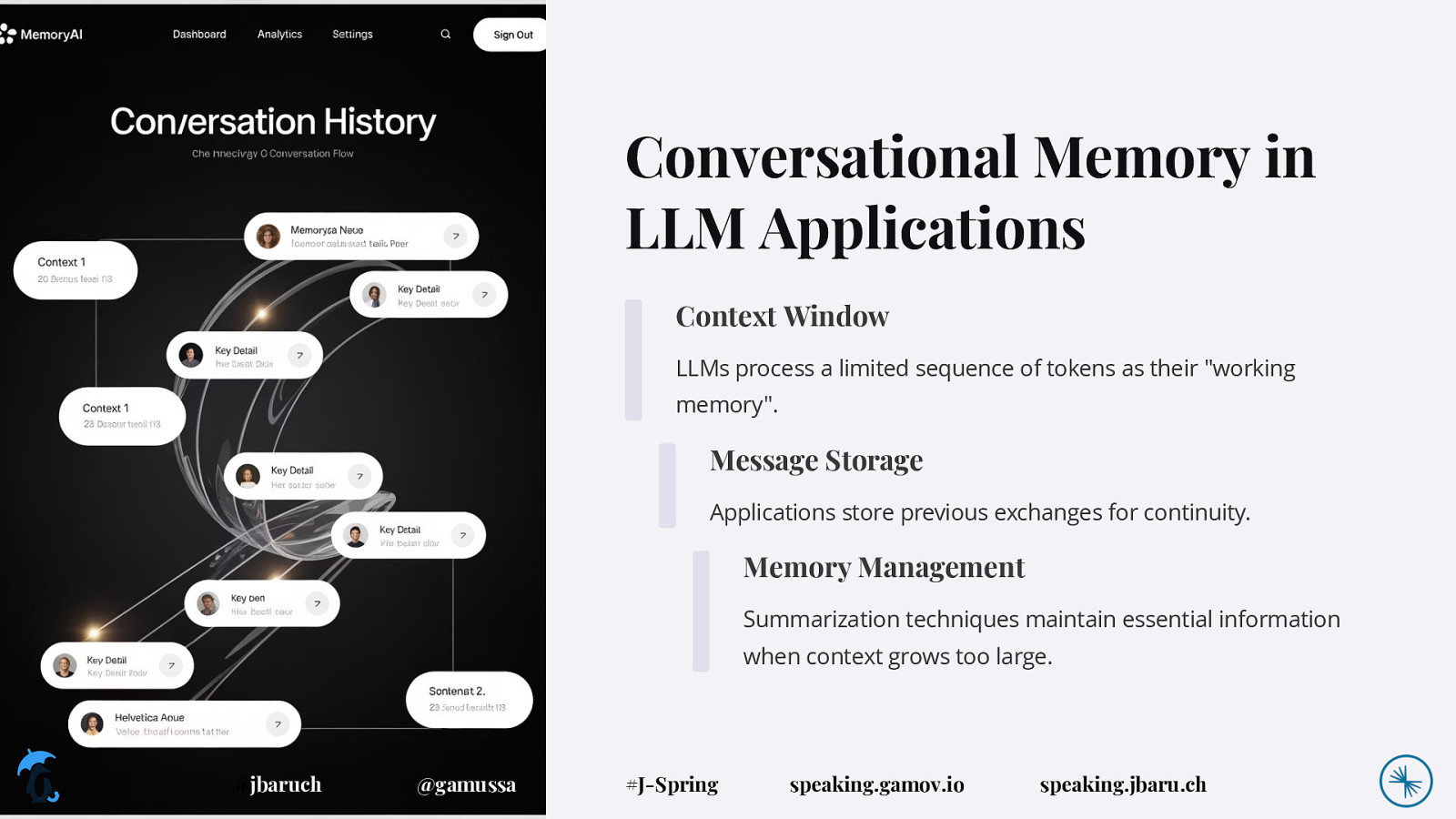

Conversational Memory in LLM Applications
• Context Window: LLMs process a limited sequence of tokens as their “working memory”.
• Message Storage: applications store previous exchanges and resend them with each request, since the model itself keeps no state between calls.
• Memory Management: summarization techniques preserve essential information when the context grows too large.
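The memory-management idea can be sketched as a bounded window of recent messages plus a running summary of everything older. This is a minimal sketch under assumptions: messages are plain strings, the window counts messages rather than tokens, and `summarizer` is a hypothetical stand-in for what would normally be an LLM summarization call. `SummarizingMemory` is not a framework class; for comparison, LangChain4j ships a window-only `MessageWindowChatMemory` that evicts old messages without summarizing them.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Function;

final class SummarizingMemory {
    private final int maxMessages;
    // Hypothetical summarizer; in a real app this would call an LLM.
    private final Function<List<String>, String> summarizer;
    private final List<String> recent = new ArrayList<>();
    private String summary = null;

    SummarizingMemory(int maxMessages, Function<List<String>, String> summarizer) {
        if (maxMessages < 1) throw new IllegalArgumentException("maxMessages must be >= 1");
        this.maxMessages = maxMessages;
        this.summarizer = summarizer;
    }

    void add(String message) {
        recent.add(message);
        if (recent.size() > maxMessages) {
            // Evict the oldest messages that no longer fit in the window.
            int overflow = recent.size() - maxMessages;
            List<String> evicted = new ArrayList<>(recent.subList(0, overflow));
            recent.subList(0, overflow).clear();
            // Fold the previous summary and the evicted messages into a new summary.
            if (summary != null) evicted.add(0, summary);
            summary = summarizer.apply(evicted);
        }
    }

    // What gets sent to the model: the summary (if any) followed by the recent window.
    List<String> context() {
        List<String> out = new ArrayList<>();
        if (summary != null) out.add(summary);
        out.addAll(recent);
        return out;
    }

    public static void main(String[] args) {
        // Toy summarizer that just joins evicted messages.
        SummarizingMemory memory =
                new SummarizingMemory(2, msgs -> "Summary: " + String.join(" / ", msgs));
        for (String m : List.of("Hi there.", "I use Spring Boot.", "Which AI library fits?")) {
            memory.add(m);
        }
        memory.context().forEach(System.out::println);
    }
}
```

Nesting the previous summary inside the next one keeps the context size bounded at `maxMessages + 1` entries, at the cost of older details being compressed repeatedly.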

Slide 7

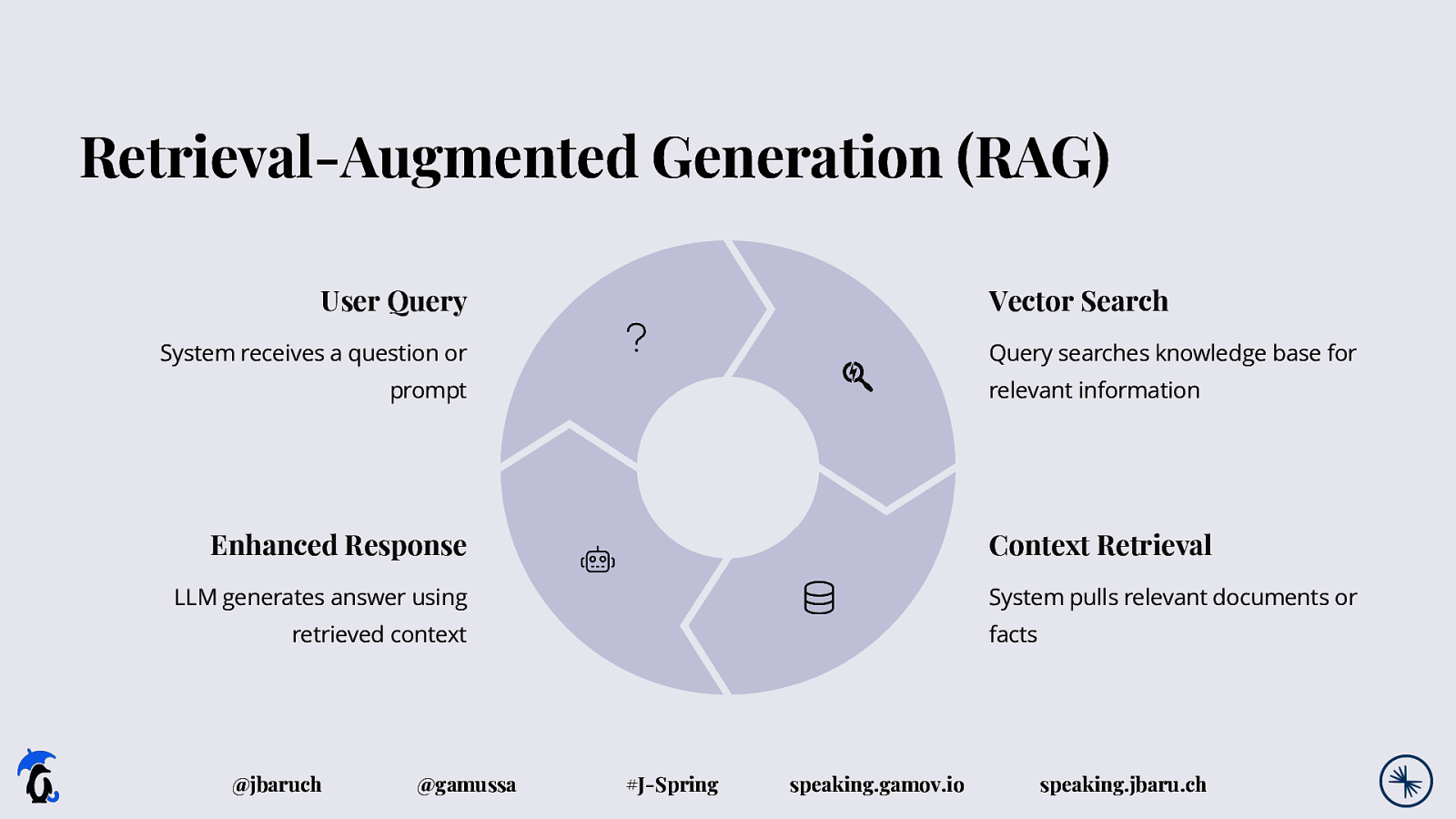

Retrieval-Augmented Generation (RAG)
• User Query: the system receives a question or prompt.
• Vector Search: the query is embedded and compared against the knowledge base to find relevant information.
• Context Retrieval: the system pulls the relevant documents or facts.
• Enhanced Response: the LLM generates an answer using the retrieved context.
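The four RAG steps map onto a few methods in the sketch below. To keep it runnable without a model, it swaps in a bag-of-words word-count vector for a real embedding model, a deliberate simplification rather than how production RAG works. `TinyRag`, `retrieve`, and `buildPrompt` are hypothetical names; in practice the frameworks provide the store and search, for example LangChain4j's `InMemoryEmbeddingStore` or Spring AI's `VectorStore` abstraction.

```java
import java.util.Comparator;
import java.util.HashMap;
import java.util.List;
import java.util.Locale;
import java.util.Map;
import java.util.stream.Collectors;

final class TinyRag {

    // Stand-in "embedding": lowercase word counts. A real system would call an embedding model.
    static Map<String, Integer> embed(String text) {
        Map<String, Integer> counts = new HashMap<>();
        for (String token : text.toLowerCase(Locale.ROOT).split("\\W+")) {
            if (!token.isEmpty()) counts.merge(token, 1, Integer::sum);
        }
        return counts;
    }

    static double cosine(Map<String, Integer> a, Map<String, Integer> b) {
        double dot = 0;
        for (Map.Entry<String, Integer> e : a.entrySet()) {
            dot += e.getValue() * (double) b.getOrDefault(e.getKey(), 0);
        }
        double na = norm(a), nb = norm(b);
        return (na == 0 || nb == 0) ? 0 : dot / (na * nb);
    }

    private static double norm(Map<String, Integer> v) {
        double sum = 0;
        for (int x : v.values()) sum += (double) x * x;
        return Math.sqrt(sum);
    }

    // Vector search + context retrieval: rank documents by similarity and keep the top k.
    static List<String> retrieve(String query, List<String> docs, int k) {
        Map<String, Integer> q = embed(query);
        return docs.stream()
                .sorted(Comparator.comparingDouble((String d) -> cosine(q, embed(d))).reversed())
                .limit(k)
                .collect(Collectors.toList());
    }

    // Augmentation: retrieved text is placed in the prompt that goes to the LLM.
    static String buildPrompt(String query, List<String> context) {
        return "Answer using only this context:\n"
                + String.join("\n", context)
                + "\n\nQuestion: " + query;
    }

    public static void main(String[] args) {
        List<String> knowledgeBase = List.of(
                "Docker Model Runner runs models locally",
                "Kafka is a streaming platform",
                "MCP standardizes model context");
        String query = "what is kafka streaming";
        System.out.println(buildPrompt(query, retrieve(query, knowledgeBase, 1)));
    }
}
```

The final generation step is omitted: the built prompt would be sent to whichever chat model the application uses.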

Slide 8

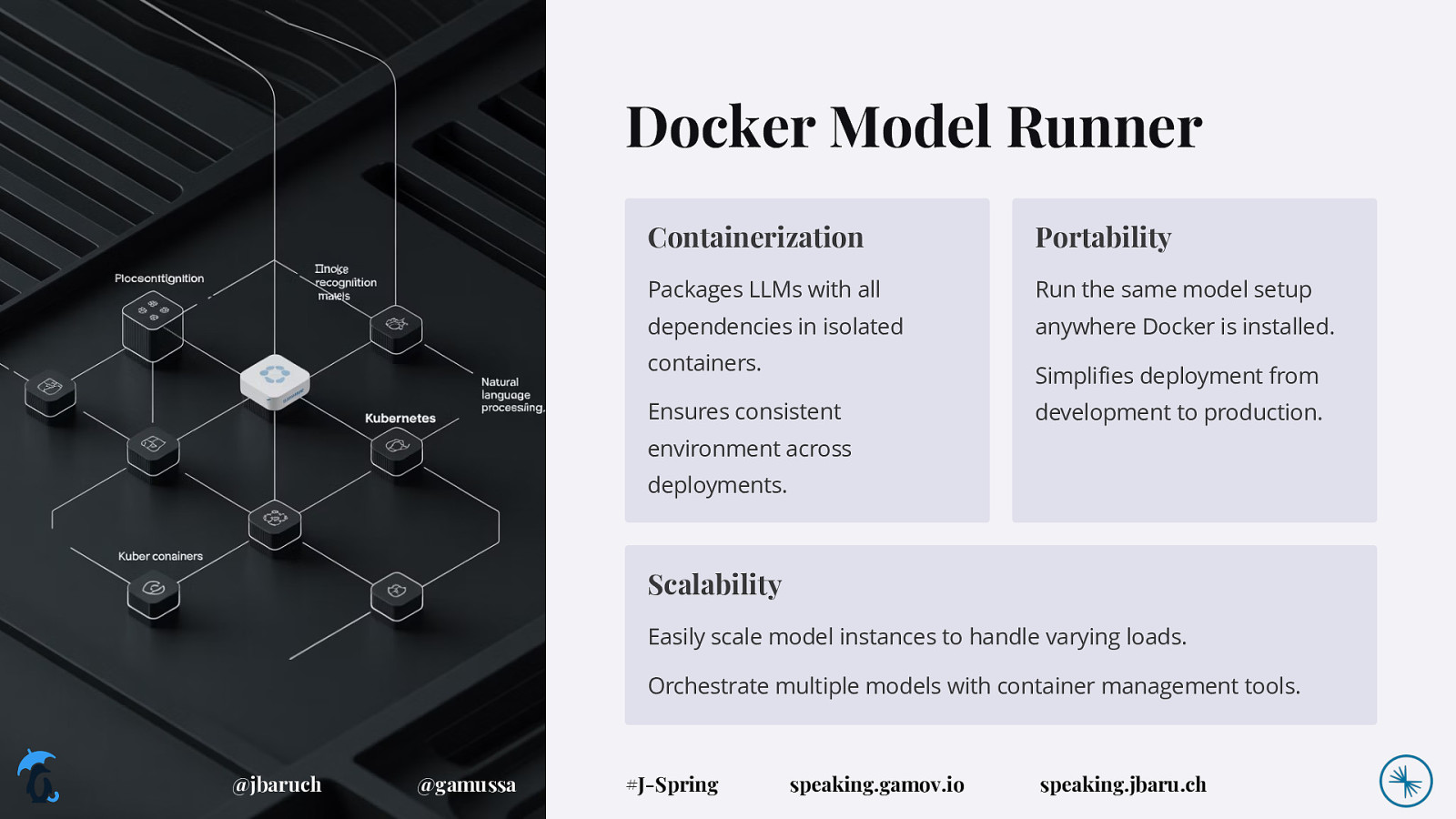

Docker Model Runner
• Containerization: packages LLMs with all dependencies in isolated containers, simplifying deployment from development to production.
• Portability: run the same model setup anywhere Docker is installed, ensuring a consistent environment across deployments.
• Scalability: easily scale model instances to handle varying loads, and orchestrate multiple models with container management tools.
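Assuming Docker Model Runner is enabled with host-side TCP access, it serves an OpenAI-compatible HTTP API that plain `java.net.http` can call. The base URL `http://localhost:12434/engines/v1` and the model name `ai/smollm2` below are assumptions about a typical local setup, not guaranteed defaults; check the Docker Model Runner documentation for your version and configuration. `ModelRunnerClient` is a hypothetical class, and request building is kept separate from sending so it can be inspected without a running model.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

final class ModelRunnerClient {
    // Assumption: OpenAI-compatible endpoint exposed on the host. Adjust for your setup.
    static final String BASE_URL = "http://localhost:12434/engines/v1";

    // Minimal JSON string escaping (quotes, backslashes, control characters).
    static String jsonString(String s) {
        StringBuilder sb = new StringBuilder("\"");
        for (char c : s.toCharArray()) {
            switch (c) {
                case '"' -> sb.append("\\\"");
                case '\\' -> sb.append("\\\\");
                case '\n' -> sb.append("\\n");
                default -> {
                    if (c < 0x20) sb.append(String.format("\\u%04x", (int) c));
                    else sb.append(c);
                }
            }
        }
        return sb.append('"').toString();
    }

    // OpenAI-style chat-completions body with a single user message.
    static String chatBody(String model, String prompt) {
        return "{\"model\":" + jsonString(model)
                + ",\"messages\":[{\"role\":\"user\",\"content\":" + jsonString(prompt) + "}]}";
    }

    static HttpRequest chatRequest(String model, String prompt) {
        return HttpRequest.newBuilder(URI.create(BASE_URL + "/chat/completions"))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(chatBody(model, prompt)))
                .build();
    }

    public static void main(String[] args) throws Exception {
        // "ai/smollm2" is an example model name; it must be pulled locally first.
        HttpRequest request = chatRequest("ai/smollm2", "Say hello in one sentence.");
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString());
        System.out.println(response.statusCode() + " " + response.body());
    }
}
```

Because the API follows the OpenAI chat-completions shape, the same base URL can typically be plugged into the OpenAI-compatible chat model integrations of LangChain4j or Spring AI instead of hand-written HTTP.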

Slide 9

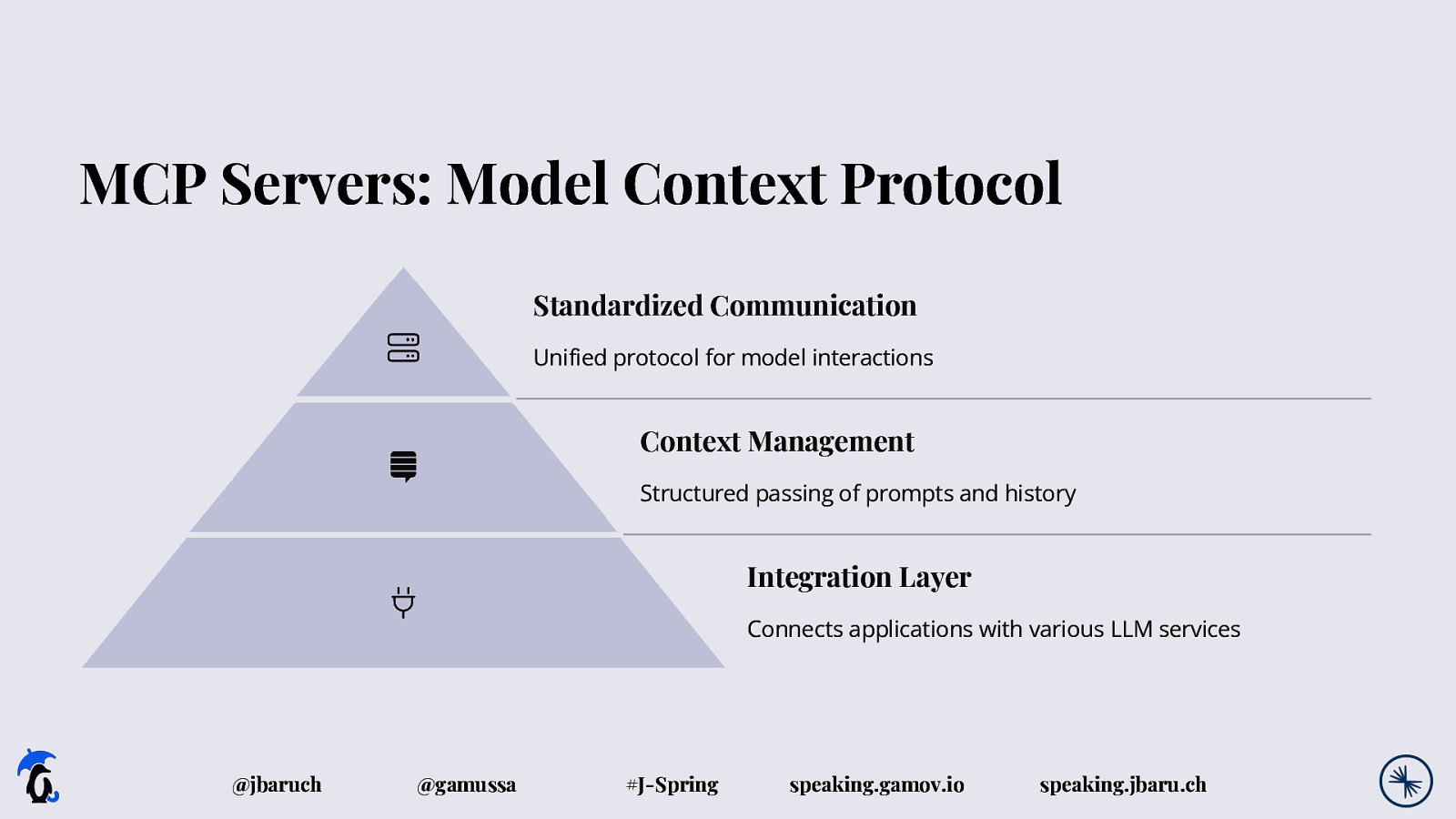

MCP Servers: Model Context Protocol
• Standardized Communication: a unified, JSON-RPC 2.0-based protocol between LLM applications (clients) and MCP servers
• Context Management: structured passing of context such as prompts, resources, and tool definitions
• Integration Layer: connects LLM applications with external tools and data sources exposed by MCP servers
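MCP messages are JSON-RPC 2.0 requests. The sketch below only builds the request strings a client would send to list a server's tools and to call one; it does not implement a transport, the initialization handshake, or response parsing. `McpMessages` and the tool `get_weather` are hypothetical, and the builder assumes method and tool names need no JSON escaping.

```java
final class McpMessages {

    // Builds a JSON-RPC 2.0 request object. paramsJson must already be valid JSON;
    // the method name is assumed to need no escaping.
    static String request(long id, String method, String paramsJson) {
        return "{\"jsonrpc\":\"2.0\",\"id\":" + id
                + ",\"method\":\"" + method + "\""
                + ",\"params\":" + paramsJson + "}";
    }

    // Asks an MCP server which tools it exposes.
    static String listTools(long id) {
        return request(id, "tools/list", "{}");
    }

    // Invokes a named tool; argumentsJson is a JSON object matching the tool's input schema.
    static String callTool(long id, String toolName, String argumentsJson) {
        return request(id, "tools/call",
                "{\"name\":\"" + toolName + "\",\"arguments\":" + argumentsJson + "}");
    }

    public static void main(String[] args) {
        System.out.println(listTools(1));
        // "get_weather" is a hypothetical tool name used only for illustration.
        System.out.println(callTool(2, "get_weather", "{\"city\":\"Amsterdam\"}"));
    }
}
```

In a real client these messages would be sent over an MCP transport such as stdio, after the `initialize` handshake, and each response would carry the matching `id`.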

Slide 10

Thank You!
• @jbaruch
• @gamussa
• #j-spring
• speaking.jbaru.ch
• speaking.gamov.io